Often when working with CX pros and teams, the discussion of common CX metrics (e.g., CSAT, OSAT, CES and NPS, a.k.a. LTR) comes up. It usually resembles a religious argument, and it often ends with the understanding that, although NPS is not the most “actionable”, “sensitive” or “reliable” measurement of a customer’s or visitor’s experience, “it’s the one we have to use here”.

That’s fine. But we need to discuss when to use it.

When it comes to “listening” to the voice of the customer, we have started to develop segments based on how input is received. We are starting to recognize Direct, Indirect and Inferred “feedback” (more on these channels in a future post) as the various ways customers, visitors, citizens, constituents and stakeholders provide their…err…thoughts. We (or at least I) can’t seem to get away from using “feedback” as the category header, when in reality, feedback in a well-designed CX program should be used deliberately, for a very specific use case.

So what does all this have to do with When and When Not to ask the NPS Question? Everything!

Sometimes there is a misconception that if you’re asking customers to provide feedback through an opt-in mechanism (e.g., a site badge, the word FEEDBACK housed in a right-margin tab, or a link at the bottom of the page), you are “measuring” the customer experience. There is actually a critical difference between feedback and measurement. Do you know what it is? Do you know why it matters?

When we talk about feedback vs. measurement, it really boils down to being reactive vs. proactive, and how you (the business) respond to that input.

We can start by looking at feedback. It is necessary and important, and it can do some good because it gives you something to react to (broken links, missing items, missing pages, etc.): things you can, and should, act on. However, feedback is purely reactive, and it is easy to lean on because reacting to complaints is easier than proactively identifying and measuring potential problems.

Feedback isn’t inherently bad – any voice of customer information is good and can be helpful – but it does tend to be anecdotal and biased.

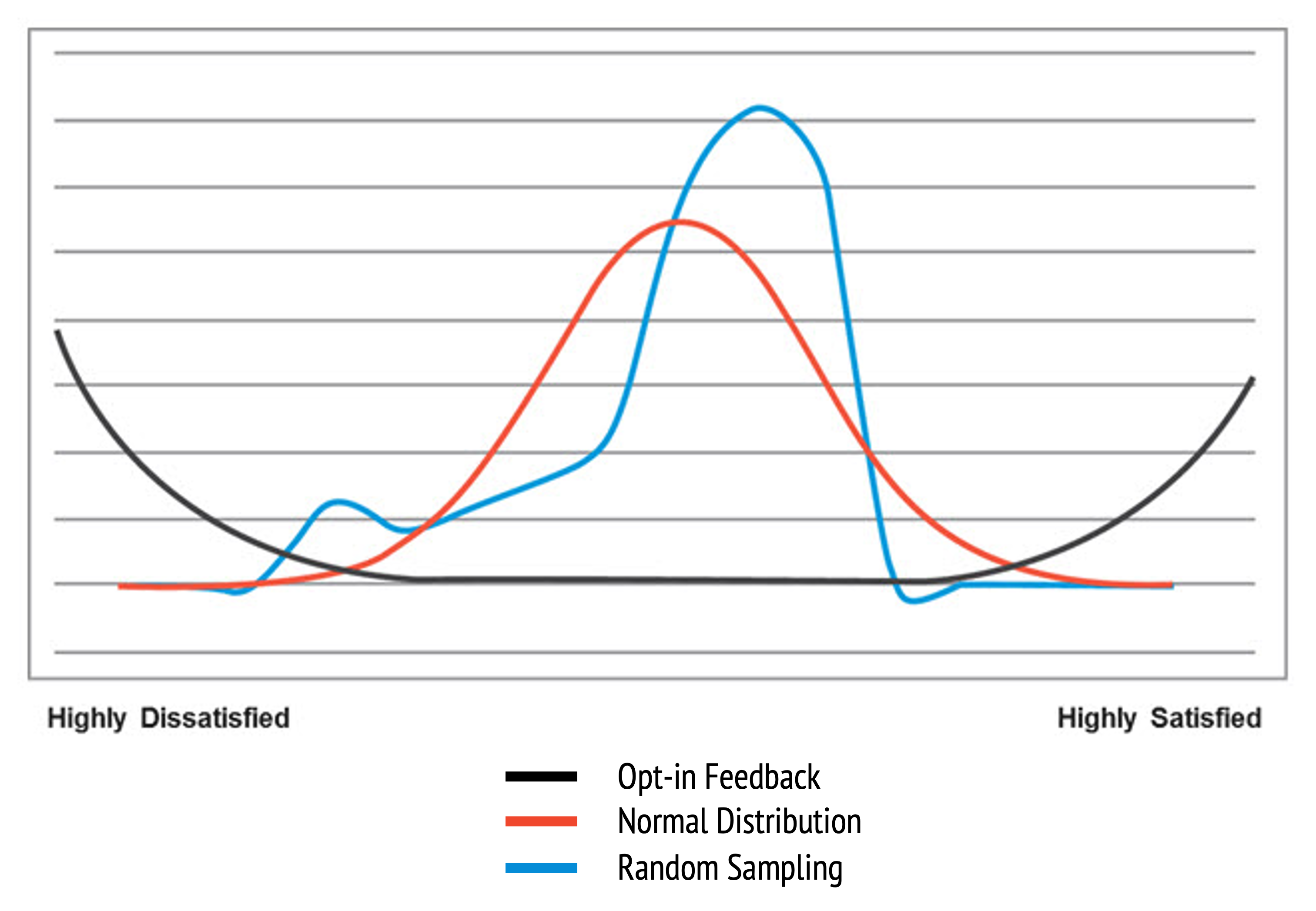

For years, I have noticed that the data shows, time and time again, that people are more likely to speak up when they have a bad experience, less likely when they have a great experience, and hardly ever when they’re somewhere in the middle. When’s the last time someone reached out to your company just to say you’re doing an okay job?

Take a look at the graph above.

What does this mean? It’s both simple and scary. There’s a silent majority that usually makes up the biggest part of your business, and in large part drives its success, yet often goes unheard. This group can quietly kill your company if you’re not meeting their expectations and needs. Collecting a “randomized sample” is a great way to get Direct input from those you serve, and that input can be predictive when done right.

You can certainly identify some of the issues people run into as they traverse your digital properties by leveraging Feedback tools, and that is the “use case” for Feedback. It’s not “measurement” but rather a “find and fix” solution, and it should be used with that in mind.

The moral of the story: “think twice” when you want to, or are being pressured to, ask the “NPS Question” through a feedback mechanism. You may not get the results you want.

High-performing CX programs drive desired business outcomes. It’s not just having the tech stack, it’s how you do it.